I just created my very first local AI agent using Ollama, the llama3 model, and a few lines of Python.

Here’s what agent-hello does:

# main.py

import requests

def talk_to_llama(prompt):

res = requests.post("http://localhost:11434/api/generate", json={

"model": "llama3",

"prompt": prompt,

"stream": False

})

return res.json()["response"]

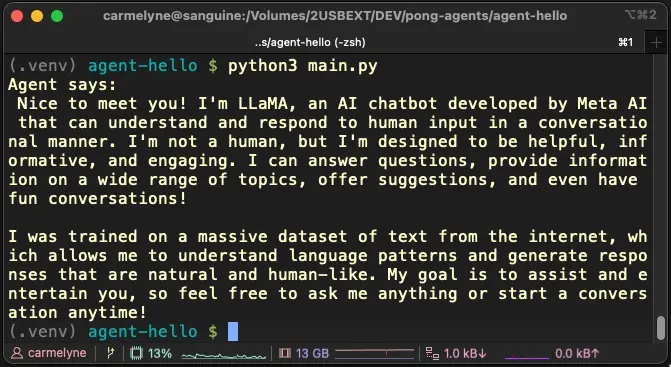

print("Agent says:\n", talk_to_llama("Hello, who are you?"))

That’s it. A little hello agent on my M1 Mac. It’s now a sovereign little AI machine.
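The script above sets "stream": False, so the reply arrives in one piece. As a rough sketch of the alternative, here is how the same endpoint could be read with streaming turned on, assuming Ollama sends one JSON object per line with a "response" fragment and a final object marked "done". The names iter_fragments and stream_llama are made up for this illustration and are not part of agent-hello.

```python
import json


def iter_fragments(lines):
    """Yield text fragments from newline-delimited JSON chunks (hypothetical helper)."""
    for raw in lines:
        if not raw:  # skip blank keep-alive lines
            continue
        chunk = json.loads(raw)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):  # the last object in the stream is marked done
            break


def stream_llama(prompt, model="llama3"):
    """Print the reply piece by piece as it is generated (hypothetical helper)."""
    # Imported here so iter_fragments can be used without requests installed.
    import requests

    with requests.post(
        "http://localhost:11434/api/generate",
        json={"model": model, "prompt": prompt, "stream": True},
        stream=True,   # let requests hand over the body incrementally
        timeout=120,   # assumed value; tune for your machine
    ) as res:
        res.raise_for_status()
        for fragment in iter_fragments(res.iter_lines()):
            print(fragment, end="", flush=True)
    print()
```

Calling stream_llama("Hello, who are you?") with Ollama running should print the answer as it is produced instead of after a pause.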

How I Got It Running

1. Installed Ollama

2. Pulled a model

ollama pull llama3

3. Set up a Python virtualenv

mkdir agent-hello && cd agent-hello

python3 -m venv .venv

source .venv/bin/activate

pip install requests

4. Ran python3 main.py — and boom, the agent replied.

python3 main.py
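If step 4 fails, the usual suspects are that Ollama is not running or the model was never pulled. Below is a small preflight sketch that checks both, assuming Ollama's /api/tags endpoint lists local models under a "models" key with names like "llama3:latest". It uses only the standard library so it works before requests is installed. The helper names has_model and check_ollama are hypothetical.

```python
import json
import urllib.request


def has_model(tags, name):
    """Return True if `name` (e.g. "llama3") appears in a /api/tags payload."""
    for entry in tags.get("models", []):
        full_name = entry.get("name", "")  # e.g. "llama3:latest"
        # Accept an exact match or a match on the part before the tag.
        if full_name == name or full_name.split(":", 1)[0] == name:
            return True
    return False


def check_ollama(model="llama3", base_url="http://localhost:11434"):
    """Return "ok" or a short hint about what is missing (hypothetical helper)."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as res:
            tags = json.load(res)
    except OSError as err:  # URLError is a subclass of OSError
        return f"Ollama is not reachable at {base_url}: {err}"
    if not has_model(tags, model):
        return f"Model {model!r} not found; try: ollama pull {model}"
    return "ok"
```

Running print(check_ollama()) before python3 main.py gives a quick hint about which of the steps above still needs doing.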

Why This Matters

- Privacy: zero data leakage. All inference runs on-device, so your prompts, code snippets, and business secrets never touch an external server. That removes the compliance risk of accidentally sending sensitive data to a third party.

- Cost: token-free and predictable. No per-token fees, no hidden bandwidth charges. Once the model is on your SSD (or external drive), you pay only for electricity and the one-time storage cost, which for steady use can be far cheaper than a managed API.

- Sovereignty: control without downtime. You own the stack, with no reliance on third-party SLAs, rate limits, or unexpected price hikes. Your agent stays up even if the cloud goes down, and you can patch, upgrade, or fork it at will.

In short, a local AI agent turns the “pay‑per‑call” model on its head: you get powerful LLM capabilities, full control, and a predictable budget—all from a single M1 Mac.
