Have you ever found yourself deep in a long conversation with an AI, only to feel it slowly veering off course? What started as a crisp, focused discussion has become muddled with small, persistent errors.

This deterioration isn’t a bug—it’s an inevitable feature of extended AI interaction. And without an active approach to manage it, the quality of your collaboration will degrade, sometimes spectacularly.

I learned this the hard way during a complex technical writing project. Three hours in, I realized the AI was building arguments on “facts” I’d never mentioned and steering us toward topics we’d explicitly decided to avoid. The shared mental model we’d built had become corrupted, and I was unknowingly complicit in the corruption.

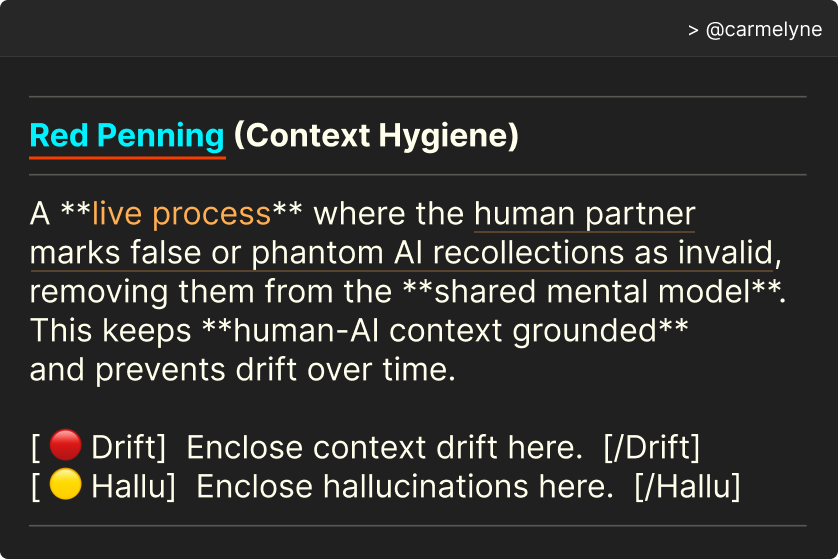

This experience led me to develop what I call “Red Penning”—borrowing the term from traditional editors who mark up manuscripts with red ink. It’s a live, active process where you explicitly flag and remove false or drifting AI statements from your shared conversation context.

It sounds simple. It is simple. And it’s absolutely essential for anyone serious about human-AI collaboration.

Why This Can’t Wait

Before diving into the how, let’s address the why. You might think, “Can’t I just say ‘no, that’s wrong’ when the AI makes a mistake?”

Here’s the problem with that approach:

Weak signals create weak corrections. When you simply say “that’s wrong,” the AI has to guess what went wrong. Was it a factual error? A misunderstanding of your intent? A forgotten detail? This guesswork often leads to overcorrection or, worse, the same type of error recurring later.

Errors compound. The most dangerous part of AI hallucinations [1] and context drift [2] is their snowball effect. A small fabrication early on becomes the “established fact” that all subsequent outputs build upon. By the time you notice, you’re not dealing with one error; you’re dealing with an entire corrupted foundation.

Passive collaboration is a losing strategy. The most effective AI users understand they’re co-pilots, not passengers. Active context management isn’t just good practice; it’s the difference between getting useful output and wasting hours on sophisticated nonsense.

The Red Penning Framework

In my experience, the issues that corrupt our shared mental model fall into two distinct categories. Giving them clear labels makes correction more effective and prevents the AI from misinterpreting your feedback.

1. Context Drift [🔴 Drift]

This happens when the AI deviates from the established topic, misinterprets a key detail, or loses the thread of conversation. The AI isn’t making things up—it’s just wandering off the path.

Example:

You: “Let’s focus on optimizing our database queries for the checkout process.”

AI: “Absolutely. Since we were discussing user authentication earlier, we should start by reviewing login performance.”

You: [🔴 Drift] We’ve moved on from authentication. We’re focusing specifically on checkout database performance now. [/Drift]

2. Hallucinations [🟡 Hallu]

This is the outright fabrication of facts, sources, or previous conversation points. These phantom memories are the most dangerous form of context corruption and must be purged immediately.

Example:

AI: “As you mentioned in your opening brief, the API response time increased by 40% last month.”

You: [🟡 Hallu] I never provided that statistic, and I’m not sure it’s accurate. Please disregard that claim entirely. [/Hallu]
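If you type these corrections often, a small helper can keep the tag format identical every time. The following is only a minimal sketch of the two tags described above: the names `Flag` and `red_pen` are made up for illustration, and nothing here is tied to any particular AI tool.

```python
from enum import Enum


class Flag(Enum):
    """The two Red Penning categories: (label, emoji). Hypothetical names."""
    DRIFT = ("Drift", "🔴")
    HALLU = ("Hallu", "🟡")


def red_pen(flag: Flag, correction: str) -> str:
    """Wrap a correction in the opening and closing tag for its category."""
    label, emoji = flag.value
    return f"[{emoji} {label}] {correction.strip()} [/{label}]"


# Build a correction to paste into the conversation.
print(red_pen(Flag.DRIFT, "We've moved on from authentication."))
print(red_pen(Flag.HALLU, "I never provided that statistic."))
```

The point of the helper is not automation for its own sake. It simply removes the temptation to improvise the tag wording mid-session.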

Getting Started with Red Penning

Start immediately. Don’t wait for the “big” errors. Flag small drifts and minor fabrications from the beginning. This trains both you and the AI to maintain higher standards throughout the session.

Be surgical, not harsh. You’re not criticizing the AI—you’re providing precise diagnostic information. Think of yourself as a debugging partner, not a harsh critic.

Stay consistent with the tags. The emoji-based system works because it’s visually distinct and categorically clear. Stick with the format even when you’re tempted to just say “no, that’s wrong.”
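One way to check your own consistency after a session is to scan a saved transcript for well-formed tags. This is a rough sketch, assuming you have the transcript as plain text; the pattern and the `extract_flags` function are illustrative inventions, not part of any tool.

```python
import re

# Matches "[🔴 Drift] ... [/Drift]" or "[🟡 Hallu] ... [/Hallu]".
# The closing label must repeat the opening label.
TAG_PATTERN = re.compile(
    r"\[(?P<emoji>🔴|🟡) (?P<label>Drift|Hallu)\]\s*(?P<body>.*?)\s*\[/(?P=label)\]",
    re.DOTALL,
)

# Each label is paired with exactly one emoji.
EXPECTED_EMOJI = {"Drift": "🔴", "Hallu": "🟡"}


def extract_flags(message: str) -> list[tuple[str, str]]:
    """Return (label, correction) pairs for every well-formed tag."""
    flags = []
    for match in TAG_PATTERN.finditer(message):
        # Skip tags whose emoji does not belong to the label.
        if EXPECTED_EMOJI[match["label"]] == match["emoji"]:
            flags.append((match["label"], match["body"]))
    return flags


message = "[🔴 Drift] We're focusing on checkout performance now. [/Drift]"
print(extract_flags(message))
```

A bare “no, that’s wrong” yields an empty list, which makes the untagged corrections easy to spot when you review a long session.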

Trust but verify. As conversations get longer, actively scan AI responses for these two patterns. The longer the session, the more vigilant you need to be.

When Red Penning Matters Most

This technique is particularly crucial for:

For quick, single-question interactions, the overhead isn’t worth it. But for any substantial collaboration, Red Penning transforms the quality of your output.

Clean saved memories, too. If you’re using an AI with persistent memory, treat that memory like a garden. Unpruned notes (half-truths, old assumptions, small misreads) become weeds that distort every future session. Drift doesn’t just happen within a session; it happens across sessions too, so regularly review saved memories and clear out the inaccuracies. Without this hygiene, yesterday’s errors quietly set the stage for tomorrow’s confusion.
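Memory features differ from product to product, and most are managed through a settings screen rather than code. Purely as an illustration of the review step, here is a sketch that assumes you have exported your saved notes and marked each one yourself as verified or not. `MemoryNote` and `prune` are hypothetical names, not any vendor’s API.

```python
from dataclasses import dataclass


@dataclass
class MemoryNote:
    """One saved note. 'verified' is set by the human reviewer, not the AI."""
    text: str
    verified: bool


def prune(notes: list[MemoryNote]) -> tuple[list[MemoryNote], list[MemoryNote]]:
    """Split notes into (kept, removed) according to the reviewer's verdict."""
    kept = [note for note in notes if note.verified]
    removed = [note for note in notes if not note.verified]
    return kept, removed


# Example review pass over made-up notes.
notes = [
    MemoryNote("Project focuses on checkout database performance", True),
    MemoryNote("API response time increased by 40% last month", False),
]
kept, removed = prune(notes)
print(f"kept {len(kept)}, removed {len(removed)}")
```

Keeping the removed notes as a separate list, rather than discarding them silently, lets you look back at which kinds of misreads keep creeping in.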

The Bottom Line

The most effective AI users understand something crucial: great collaboration requires active maintenance. You can’t just set it and forget it.

Red Penning isn’t just a technique—it’s a mindset. It’s the recognition that great human-AI collaboration requires the same attention to detail and active engagement that great human-human collaboration demands.

So the next time you start on a substantial project with your AI partner, reach for your virtual red pen. Your future self will thank you for the clarity, and your AI partner will thank you for the guidance.

Together, you’ll build something neither of you could have created alone.

[1] AI Hallucinations. https://en.wikipedia.org/wiki/Hallucination_(artificial_intelligence)

[2] How to Mitigate AI Model Drift in Dynamic Environments. https://www.stackmoxie.com/blog/how-to-mitigate-ai-model-drift