Letting AI code while I do QA?

I am testing a structured workflow for AI‑assisted dev.

I’ve been in webdev for years, wearing many hats—webmaster, UI/UX designer, full‑stack developer, QA, DevOps, tech SEO, and project manager.

Wearing that many hats also means I see myself as a crappy coder, but one who understands the full architecture of an app. That’s why I’ve been exploring coding assistants to help me build apps from scratch. My assumption? Let the AI handle the coding while I focus on QA.

Directory Structure for My AI‑Assisted Dev

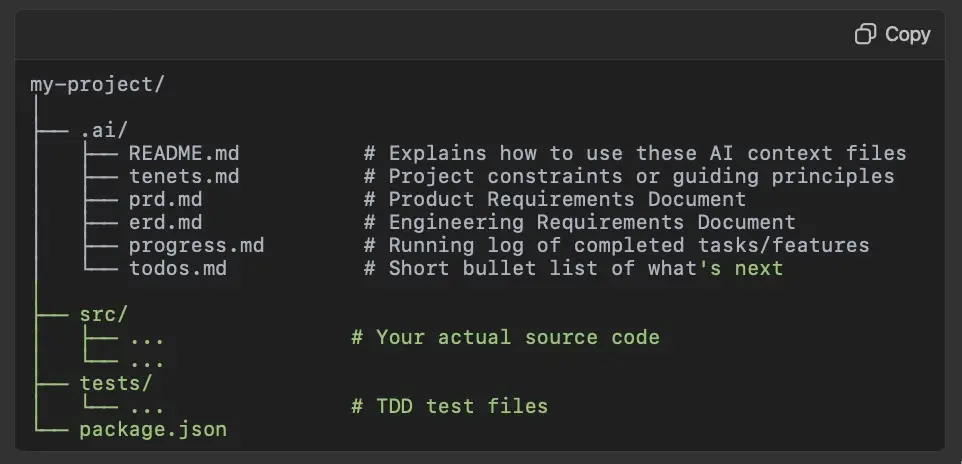

Directory Structure

To keep things structured, I’ve set up a dedicated .ai/ directory—a context hub for AI‑assisted development. It holds key docs like:

AI Tenets

📌 tenets.md

Dev Principles & Constraints: your overarching guidelines, brand voice, code style preferences, etc.

- Philosophical Tenets
  - “Always prioritize user privacy.”
  - “Respect the user’s time with fast load times.”
- Tech Tenets
  - “Keep dependencies to a minimum to reduce bloat.”
  - “Follow TDD at all times.”
  - “Follow this coding style: code_style.”
- Design Tenets
  - “Maintain consistent brand styling.”
  - “Accessibility is non‑negotiable.”
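If the tenets live in a nested list like this, a small script can turn them into a preamble to paste at the top of every prompt. This is a minimal Python sketch, assuming tenets.md uses top-level `- ` bullets for categories and indented `- ` bullets for the rules; `parse_tenets` and `tenets_to_prompt` are hypothetical helpers, not part of any tool.

```python
def parse_tenets(markdown: str) -> dict[str, list[str]]:
    """Group indented '- ' bullets under the top-level '- ' bullet above them.

    Assumed format (hypothetical): category bullets at column 0, rules indented.
    """
    tenets: dict[str, list[str]] = {}
    category = None
    for line in markdown.splitlines():
        item = line.strip()
        if not item.startswith("- "):
            continue  # skip headings, emoji markers, prose, and blank lines
        indent = len(line) - len(line.lstrip())
        text = item[2:].strip().strip("“”\"")  # drop surrounding quote marks
        if indent == 0:
            category = text
            tenets[category] = []
        elif category is not None:
            tenets[category].append(text)
    return tenets


def tenets_to_prompt(tenets: dict[str, list[str]]) -> str:
    """Render parsed tenets as a plain-text block to prepend to a prompt."""
    lines = ["Follow these project tenets:"]
    for category, rules in tenets.items():
        lines.append(f"{category}:")
        lines.extend(f"  - {rule}" for rule in rules)
    return "\n".join(lines)
```

Keeping the tenets as data rather than retyping them means every prompt gets the same wording, which is one small lever on consistency.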

Product Req Docs

📌 prd.md

Product Requirements Document

- Features

- User Stories

- Acceptance Criteria

- Low‑Fidelity Wireframes

Engineering Req Docs

📌 erd.md

Engineering Requirements Document

- Architecture & Data Flow

- API Endpoints

- Database Schema

- Performance & Scalability

- Testing & QA

- Deployment & DevOps

- Technical SEO/AIO
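For QA, the API Endpoints section can act as a contract that the AI’s output gets checked against. A sketch assuming a hypothetical convention where erd.md lists each endpoint on its own line as `- METHOD /path`; how you collect the routes that were actually implemented depends on your framework, so here they are simply passed in as a set of `(method, path)` pairs.

```python
import re

# Hypothetical erd.md convention: "- GET /api/posts" (one endpoint per bullet).
ENDPOINT_LINE = re.compile(
    r"^[ \t]*-[ \t]*(GET|POST|PUT|PATCH|DELETE)[ \t]+(/\S*)", re.MULTILINE
)


def documented_endpoints(erd_markdown: str) -> set[tuple[str, str]]:
    """Collect (method, path) pairs declared in the ERD."""
    return {(m.group(1), m.group(2)) for m in ENDPOINT_LINE.finditer(erd_markdown)}


def missing_endpoints(erd_markdown: str, implemented: set[tuple[str, str]]) -> list[tuple[str, str]]:
    """List documented endpoints the implementation does not provide, sorted."""
    return sorted(documented_endpoints(erd_markdown) - set(implemented))
```

An empty result means every documented endpoint exists; anything else goes back to the assistant as a concrete gap rather than a vague "you missed something."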

Progress

📌 progress.md

A running changelog of the tasks and features completed so far, plus any relevant notes and key decisions made along the way.
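Updating a changelog by hand is the first thing to get skipped, so a helper can stamp each entry with a date. A minimal sketch; the `## date: feature` heading and `- Decision:` bullet format are my own assumptions, not a standard.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def log_progress(path: Path, feature: str, decisions: list[str],
                 when: Optional[date] = None) -> str:
    """Append a dated entry to progress.md and return the text that was written."""
    when = when or date.today()
    entry = [f"## {when.isoformat()}: {feature}"]
    entry += [f"- Decision: {d}" for d in decisions]
    text = "\n".join(entry) + "\n\n"
    path.parent.mkdir(parents=True, exist_ok=True)  # create .ai/ if missing
    with path.open("a", encoding="utf-8") as f:  # append, never overwrite history
        f.write(text)
    return text
```

Opening the file in append mode keeps earlier entries intact even if the assistant is the one calling the helper.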

Todos

📌 todos.md

Living Backlog (next steps & new ideas)
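When a todo is checked off, it can graduate into progress.md. A sketch assuming todos.md uses GitHub-style task list checkboxes (`- [ ]` open, `- [x]` done); it only splits the text, so the caller decides where the completed items go.

```python
def split_todos(todos_markdown: str) -> tuple[list[str], str]:
    """Separate completed '- [x]' items from the rest of todos.md.

    Returns (completed item texts, remaining markdown).
    """
    done, remaining = [], []
    for line in todos_markdown.splitlines():
        stripped = line.strip()
        if stripped.lower().startswith("- [x]"):  # accepts [x] and [X]
            done.append(stripped[5:].strip())
        else:
            remaining.append(line)
    return done, "\n".join(remaining)
```

The completed items could then be written to the changelog and the remaining text saved back to todos.md, so the backlog only ever shows open work.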

The goal? Bridge the gap between planning & dev—so AI coding assistants don’t just generate code in a vacuum but actually follow a structured dev process.
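One way to make sure the assistant actually sees these docs is to concatenate them into a single preamble in a fixed priority order. A minimal sketch, assuming the file names above and the order tenets, PRD, ERD, progress, todos; it uses a character budget as a rough stand-in for the model’s context limit (real token counts depend on the model’s tokenizer).

```python
from pathlib import Path

# Assumed priority: constraints first, then requirements, then current state.
CONTEXT_FILES = ["tenets.md", "prd.md", "erd.md", "progress.md", "todos.md"]


def build_context(ai_dir: Path, max_chars: int = 20_000) -> str:
    """Concatenate the .ai/ docs into one prompt preamble, capped at max_chars."""
    parts = []
    used = 0
    for name in CONTEXT_FILES:
        path = ai_dir / name
        if not path.is_file():
            continue  # skip docs that don't exist yet
        section = f"=== {name} ===\n{path.read_text(encoding='utf-8').strip()}\n\n"
        remaining = max_chars - used
        if len(section) > remaining:
            parts.append(section[:remaining])  # truncate the overflowing doc, drop the rest
            break
        parts.append(section)
        used += len(section)
    return "".join(parts)
```

Putting the tenets first means that if anything gets truncated, it is the backlog, not the rules.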

But even with all this structure, AI still struggles with consistency. I’m now figuring out how to handle cases where AI needs to transpile code & follow prompts reliably.

As you know, coding assistants have limited contextual awareness, meaning they often:

❌ Miss dependencies
❌ Misinterpret instructions
❌ Lose track of previous logic

💡 I’d love to hear from others trying this approach!

- How do you get AI to transpile code reliably (e.g., from SudoLang) into different languages or frameworks?

- What’s the best way to keep prompts structured so AI maintains consistency?

- Have you found an effective workflow to extend AI’s contextual memory for larger projects?

I’m just trying to figure this out too—curious to hear what’s worked (or hasn’t) for you.
