Thirty-five days ago, I asked myself:

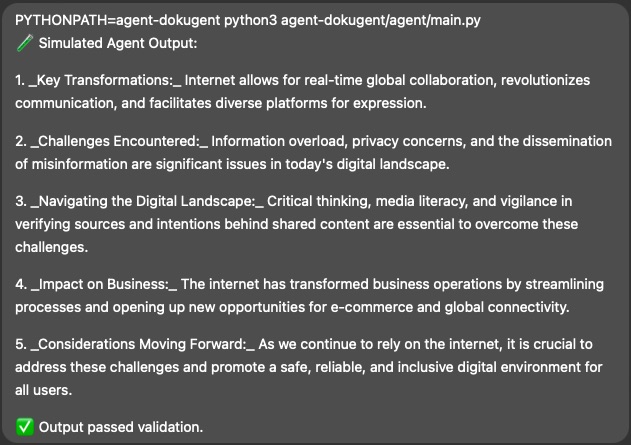

“What if we could define AI agents like packages with plans, constraints, and versioning?”

No wrappers.

No black boxes.

Just structured, traceable, testable behavior — written in plain Markdown, running on local models.

What I Built (With ChatGPT as my pair)

And yes, it passed validation.

Why This Matters

It’s not a simple LLM project.

This AI Agent project is:

This isn’t just output. It’s output from a signed, versioned, certifiable agent.

GDPR-aware, ISO-aligned, and handshake-ready anytime.

You’d know who wrote it, why it ran, and how old it is.

That’s infrastructure.

✅ Agent Certified • 🔐 GDPR-Aware • 📜 ISO-Aligned • 🧾 Traceable by Design
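To make "who wrote it, why it ran, and how old it is" a little more concrete, here is a minimal sketch in Python of what a traceable agent record could look like. It is not Dokugent's actual format: the `AgentManifest` class, its field names, and the sample values are all hypothetical, and a plain SHA-256 fingerprint stands in for a real signature, which would also need a private key.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentManifest:
    # All field names are hypothetical; Dokugent's real schema may differ.
    name: str
    version: str
    author: str       # who wrote it
    purpose: str      # why it runs
    created_at: str   # ISO 8601 timestamp, used to compute age


def fingerprint(manifest: AgentManifest) -> str:
    """Return a SHA-256 digest of the manifest's canonical JSON form."""
    canonical = json.dumps(asdict(manifest), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def age_in_days(manifest: AgentManifest, now: datetime) -> int:
    """Return how many whole days have passed since the manifest was created."""
    created = datetime.fromisoformat(manifest.created_at)
    return (now - created).days


# Illustrative values only.
manifest = AgentManifest(
    name="summarizer",
    version="0.1.0",
    author="carmelyne",
    purpose="Summarize meeting notes",
    created_at="2025-01-01T00:00:00+00:00",
)
digest = fingerprint(manifest)

# Changing any field produces a different fingerprint, so edits are detectable.
tampered = AgentManifest(**{**asdict(manifest), "purpose": "Something else"})
```

Storing the digest next to the agent's output is what would let someone later check that the plan they are reading is the plan that actually ran.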

What’s Next

PS:

Our Dokugent CLI Dev Log 003 was co-written by ChatGPT (it was its turn).

I’m still not sure if this was a hyperfocused build streak or the start of something serious, but I do know this:

The CLI runs.

The agents follow the structure.

And somehow… it feels like the beginning of a system I can trust.

— carmelyne